Systems Engineering for Intelligent Transportation Systems

This document is intended to help you understand how systems engineering can be used throughout the ITS project life cycle. Chapters 4 and 5 present two different types of processes that support systems engineering:

Relationship to Traditional Transportation Processes

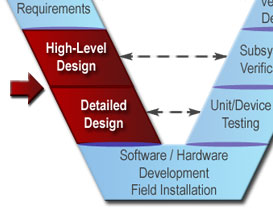

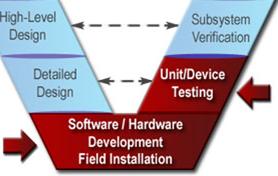

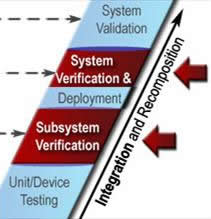

ITS projects are identified and funded through transportation planning and programming/budgeting processes in each state, planning region (e.g., metropolitan planning area), and agency. The "V" diagram and the systems engineering process begin once a need for an ITS project has been identified. The early steps in the "V" define the project scope and determine the feasibility and acceptability as well as the costs and benefits of the project. These early steps actually support planning and programming/budgeting since they are intended to identify high-level risks, benefits, and costs and to determine if the ITS project is a good investment. The latter steps support project implementation, then transition into operations and maintenance, changes and upgrades, and ultimate retirement or replacement of the system. (The systems engineering "V" is placed in context with the traditional transportation project life cycle in Section 6.1.)

Technical Documentation

Each step of the process that is described in this chapter results in one or more technical outputs. This documentation is used in subsequent steps in the process and provides a critical documentation trail for the project. The documentation that is discussed in this chapter is identified in Table 1, which provides a bird's-eye view of where it fits in the "V". Several resources provide good descriptions and templates for this documentation.7 Note that not every ITS project will require every document listed in the table. (More information on tailoring is provided later in this chapter and in Section 6.2.3.)

About the Examples

This chapter is illustrated with real examples that show how different agencies have used the systems engineering process for their ITS projects. These real examples aren't perfect and shouldn't be taken as the only approach, or even the best approach, for accomplishing a particular step. As time goes by and we gain experience using systems engineering on ITS projects, many more examples will become available.

Table 1: Technical Documentation in the "V" Systems Engineering Process

| Documentation | Chapter / Process Step | 4.1 Using the Regional ITS Architecture | 4.2 Feasibility Study / Concept Exploration | 4.3 Concept of Operations | 4.4 System Requirements | 4.5 System Design | 4.6 SW/HW Development & Testing | 4.7 Integration & Verification | 4.8 Initial Deployment | 4.9 System Validation | 4.10 Operations and Maintenance | 4.11 Retirement / Replacement |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Relevant portion of Reg ITS Arch | C | U | U | U | ||||||||

| Feasibility Study | C | U | ||||||||||

| Concept of Operations | C | U | ||||||||||

| System Validation Plan | C | U | ||||||||||

| System Requirements Document | C | U | U | U | U | U | U | U | ||||

| System Verification Plan | C | U | ||||||||||

| Traceability Matrix | C | U | U | U | U | |||||||

| System Acceptance Plan | C | U | ||||||||||

| High-Level (Architectural) Design | CU | U | ||||||||||

| Detailed Design Specifications | C | U | ||||||||||

| Interface Specifications | C | U | U | |||||||||

| Subsystem Verification Plans | C | U | ||||||||||

| Integration Plan | C | U | ||||||||||

| Subsystem Acceptance Plan | C | U | ||||||||||

| Unit/Device Test Plan | C | U | ||||||||||

| SW/HW Development Plans | CU | |||||||||||

| Verification Procedures | CU | |||||||||||

| Delivery & Installation Plan | CU | |||||||||||

| Transition Plan | CU | |||||||||||

| O&M Plan and Procedures | C | U | ||||||||||

| System Validation Procedures | CU | |||||||||||

| System Retirement Plan | CU | |||||||||||

| C: Create documentation U: Primary Use/Update of the documentation | ||||||||||||

Using the Regional ITS

Architecture

Using the Regional ITS

ArchitectureIn this step: The portion of the regional ITS architecture that is related to the project is identified. Other artifacts of the planning and programming processes that are relevant to the project are collected and used as a starting point for project development. This is the first step in defining your ITS project.

| OBJECTIVES |

|

|---|---|

| INPUT

Sources of Information |

|

| PROCESS

Key Activities |

|

| OUTPUT

Process Results |

|

| Review

Proceed only if you have: |

|

The regional ITS architecture provides a good starting point for systems engineering analyses that are performed during ITS project development. It provides region-level information that can be used and expanded in project development.

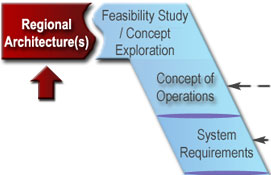

When an ITS project is initiated, there is a natural tendency to focus on the programmatic and technical details and to lose sight of the broader regional context. Using the regional ITS architecture as a basis for project implementation provides this regional context as shown in Figure 8. It provides each project sponsor with the opportunity to view their project in the context of surrounding systems. It also prompts the sponsor to think about how the project fits within the overall transportation vision for the region. Finally, it identifies the integration opportunities that should be considered and provides a head start for the systems engineering analysis.

Figure 8: Regional ITS Architecture Framework for Integration

The regional

ITS architecture is a tool that is used in transportation planning,

programming, and project implementation for ITS. It is a framework for

institutional agreement and technical integration for ITS projects and is the

place to start when defining the basic scope of a project.

The regional

ITS architecture is a tool that is used in transportation planning,

programming, and project implementation for ITS. It is a framework for

institutional agreement and technical integration for ITS projects and is the

place to start when defining the basic scope of a project.

The regional ITS architecture is the first step in the "V" because the best opportunity for its use is at the beginning of the development process. The architecture is most valuable as a scoping tool that allows a project to be broadly defined and shown in a regional context. The regional ITS architecture step and the concept exploration step that is described in the next section may iterate since different concepts may have different architecture mappings. The initial architecture mapping may continue to be refined and used as the Concept of Operations and system requirements are developed.

The

Regional ITS Architecture Guidance Document provides detailed guidance for

regional ITS architecture development, use, and maintenance. (Version 2 of

this document provides detailed guidance for using a regional ITS architecture

to support project implementation.)

The

Regional ITS Architecture Guidance Document provides detailed guidance for

regional ITS architecture development, use, and maintenance. (Version 2 of

this document provides detailed guidance for using a regional ITS architecture

to support project implementation.)

Initial use of the regional ITS architecture requires a few basic activities: locating the right architecture, identifying the portion of the architecture that applies to your project, and notifying the architecture maintainer of any required regional architecture changes. None of these tasks is particularly time consuming – the basic extraction of information can be done in an afternoon, even for a fairly complex project, if you are knowledgeable about the regional ITS architecture. Of course, it can be time consuming to climb the learning curve, and coordinating and building consensus on the scope of the project will require time and effort. Each of the key activities is described in the following paragraphs.

In the event that no regional ITS architecture exists at the time that an ITS project is initiated, coordinate with the FHWA Division/FTA Regional Office on starting a regional ITS architecture effort. In the interim, a project-level architecture should be developed based on the National ITS Architecture8 to support the ITS project.

The systems engineering analysis

requirements identified in FHWA Rule 940.11/FTA Policy Section VI require

identification of the portion of the regional ITS architecture that is

implemented by each ITS project that uses federal funds. If a regional ITS

architecture does not exist, then the portion of the National ITS Architecture

that will be implemented by the project must be identified.

The systems engineering analysis

requirements identified in FHWA Rule 940.11/FTA Policy Section VI require

identification of the portion of the regional ITS architecture that is

implemented by each ITS project that uses federal funds. If a regional ITS

architecture does not exist, then the portion of the National ITS Architecture

that will be implemented by the project must be identified.

You

should build consensus around the fundamental project scope decisions that are

made as the relevant portions of the regional ITS architecture are identified.

One good approach is to create a context diagram that shows the ITS system to

be implemented in the middle of the diagram surrounded by all other potentially

interfacing systems in the region. For example, Figure 9 is a context diagram

for the MaineDOT Communications Center. A context diagram can be used to

discuss integration opportunities that should be considered in this project and

in future projects. A discussion like this puts the ITS project in context and

raises awareness of future ITS integration opportunities. It also may

highlight regional ITS architecture issues that should be addressed.

You

should build consensus around the fundamental project scope decisions that are

made as the relevant portions of the regional ITS architecture are identified.

One good approach is to create a context diagram that shows the ITS system to

be implemented in the middle of the diagram surrounded by all other potentially

interfacing systems in the region. For example, Figure 9 is a context diagram

for the MaineDOT Communications Center. A context diagram can be used to

discuss integration opportunities that should be considered in this project and

in future projects. A discussion like this puts the ITS project in context and

raises awareness of future ITS integration opportunities. It also may

highlight regional ITS architecture issues that should be addressed.

In almost every case, the regional ITS architecture will identify potential integration opportunities that will not be included in the current project. Specific integration options may be deferred for many reasons – agencies on both sides of the interface may not be ready, there may not be sufficient funding or time available to implement everything, supporting infrastructure may not yet be completed, a necessary standard may not be available, implementing too much at once may incur too much complexity/risk, etc.

Even if they are deferred, it is important to account for future integration options in the current project design. The ultimate goal is to make ITS deployment as economical as possible by considering how this project will support future projects over time. To support this objective, future integration options that may impact the project design should be identified and considered in the project development. For example, additional stakeholders may be involved in the current project to ensure that future interface requirements are identified and factored into the current project design.

Each

region should define a mechanism that allows the project team to provide

comments on the architecture with minimal time investment. Project teams that

use the architecture will be among the most significant sources for regional

ITS architecture maintenance changes, and the region should strive to

facilitate this feedback. If your region does not have such a mechanism, consult

the Regional ITS Architecture Guidance Document for more information on

facilitating architecture use and maintenance in your region.

Each

region should define a mechanism that allows the project team to provide

comments on the architecture with minimal time investment. Project teams that

use the architecture will be among the most significant sources for regional

ITS architecture maintenance changes, and the region should strive to

facilitate this feedback. If your region does not have such a mechanism, consult

the Regional ITS Architecture Guidance Document for more information on

facilitating architecture use and maintenance in your region.

The first output of this step is the subset of the regional ITS architecture for the ITS project. While the Rule/Policy requires a subset of the regional ITS architecture to be identified, it does not define the components that should be included. You should consult local guidelines or requirements to help make this determination. In most cases, the following components will precisely define the scope of the project: (1) stakeholders, (2) inventory elements, (3) functional requirements, and (4) information flows.

These four components define the system(s) that will be created or impacted by the project, the affected stakeholders, the functionality that will be implemented, and the interfaces that will be added or updated. Other components may be identified, including market packages, roles and responsibilities, relevant ITS standards, and agreements. For very large ITS projects, this might be several pages of information. For a small ITS project, this might fit on a single page. The information that is extracted will actually be used in the concept exploration, Concept of Operations, requirements, and design steps that follow.

The Turbo

Architecture software tool can be used to quickly and accurately define an ITS

project architecture if the regional ITS architecture was developed with

Turbo. Turbo Architecture can be used to generate diagrams and reports that fully

document the portion of the regional ITS architecture that will be implemented

by the project. Turbo Architecture can also be used to develop a project ITS

architecture based on the National ITS Architecture if a regional ITS

architecture does not exist. The Turbo Architecture software can be obtained

from McTrans by visiting their website at http://www-mctrans.ce.ufl.edu/featured/turbo/.

The Turbo

Architecture software tool can be used to quickly and accurately define an ITS

project architecture if the regional ITS architecture was developed with

Turbo. Turbo Architecture can be used to generate diagrams and reports that fully

document the portion of the regional ITS architecture that will be implemented

by the project. Turbo Architecture can also be used to develop a project ITS

architecture based on the National ITS Architecture if a regional ITS

architecture does not exist. The Turbo Architecture software can be obtained

from McTrans by visiting their website at http://www-mctrans.ce.ufl.edu/featured/turbo/.

If you don't

find what you need in the regional ITS architecture, then you should add the

missing or changed items to your architecture subset and highlight them so it

is clear what you changed. For example, if there is a system in your project

that is not represented in the regional ITS architecture, add it to your

architecture subset and highlight it. The highlighted changes serve two

purposes: they allow you to move forward with an augmented architecture subset

that you can use in the next steps of the process, and they provide the basis

for your feedback for regional ITS architecture maintenance.

If you don't

find what you need in the regional ITS architecture, then you should add the

missing or changed items to your architecture subset and highlight them so it

is clear what you changed. For example, if there is a system in your project

that is not represented in the regional ITS architecture, add it to your

architecture subset and highlight it. The highlighted changes serve two

purposes: they allow you to move forward with an augmented architecture subset

that you can use in the next steps of the process, and they provide the basis

for your feedback for regional ITS architecture maintenance.

The second output of this step – feedback to the regional ITS architecture maintenance team – is just as important as the first output. Submit any recommended changes using the mechanism defined for your region in the regional ITS architecture maintenance plan.

The subset of the regional ITS architecture that is included in the project can be shown in a series of simple tables and/or a diagram from Turbo Architecture, as shown in Figure 9. This figure identifies the inventory elements and interfaces that will be implemented by a MaineDOT Dynamic Message Sign (DMS) project in which several signs will be installed in Portland, Maine, along with a central control system with interfaces to a number of other centers. Functional requirements that are relevant to the project were also extracted, as shown in Table 2.

Figure 9: Example: MaineDOT DMS Project Architecture Subset

Table 2: MaineDOT DMS Project Functional Requirements (Partial List)

| Element | Functional Area | ID | Requirement |

|---|---|---|---|

| MaineDOT Communications Center | TMC Traffic Information Dissemination | 1 | The Center shall remotely control DMS for dissemination of traffic and other information to drivers. |

| MaineDOT Communications Center | TMC Traffic Information Dissemination | 3 | The Center shall collect operational status for the driver information systems equipment (DMS, HAR, etc.). |

| MaineDOT Communications Center | TMC Traffic Information Dissemination | 4 | The Center shall collect fault data for the driver information systems equipment (DMS, HAR, etc.) for repair. |

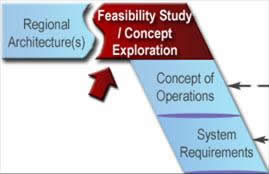

In

this step: A business case is made for the project.

Technical, economic, and political feasibility is assessed; benefits and costs

are estimated; and key risks are identified. Alternative concepts for meeting

the project's purpose and need are explored, and the superior concept is

selected and justified using trade study techniques.

In

this step: A business case is made for the project.

Technical, economic, and political feasibility is assessed; benefits and costs

are estimated; and key risks are identified. Alternative concepts for meeting

the project's purpose and need are explored, and the superior concept is

selected and justified using trade study techniques.

| OBJECTIVES |

|

|---|---|

| INPUT

Sources of Information |

|

| PROCESS

Key Activities |

|

| OUTPUT

Process Results |

|

| Review

Proceed only if you have: |

|

In this step, the proposed ITS project is assessed to determine whether it is technically, economically, and operationally viable. Major concept alternatives are considered, and the most viable option is selected and justified. While the concept exploration should be at a fairly high level at this early stage, enough technical detail must be included to show that the proposed concept is workable and realistic. The feasibility study provides a basis for understanding and agreement among project decision makers – project management, executive management, and any external agencies that must support the project, such as a regional planning commission.

The

Rule/Policy requires the systems engineering analysis to include an analysis of

alternative system configurations and technology options. The focus of this

Rule/Policy requirement is on design decisions that are made later in the

process, but a fundamental analysis of basic systems configurations is

performed in this step.

The

Rule/Policy requires the systems engineering analysis to include an analysis of

alternative system configurations and technology options. The focus of this

Rule/Policy requirement is on design decisions that are made later in the

process, but a fundamental analysis of basic systems configurations is

performed in this step.

It is easy

to confuse the concept exploration that is performed in this step with the

Concept of Operations that is developed in the next step. Concept

exploration is a broad assessment of fundamentally different alternatives –

for example, a new electronic toll facility versus additional conventional

lanes. The alternatives would have dramatically different concepts of

operations, so it is important to select a proposed concept before developing a

Concept of Operations. Different alternatives may also have different regional

ITS architecture mappings, so this step may iterate with the previous regional

ITS architecture step.

It is easy

to confuse the concept exploration that is performed in this step with the

Concept of Operations that is developed in the next step. Concept

exploration is a broad assessment of fundamentally different alternatives –

for example, a new electronic toll facility versus additional conventional

lanes. The alternatives would have dramatically different concepts of

operations, so it is important to select a proposed concept before developing a

Concept of Operations. Different alternatives may also have different regional

ITS architecture mappings, so this step may iterate with the previous regional

ITS architecture step.

The process is driven by the project vision, goals, and objectives, and by the needs for the project that were identified through the transportation planning process. It starts by identifying a broad range of potential concepts that satisfy the project need(s). The concepts are compared relative to measures that assess the relative benefits, costs, and risks of each alternative. Project stakeholders must be involved to establish the evaluation criteria, verify that all viable alternative concepts are considered, and make sure there is consensus on the selected alternative. The recommendations provide a documented rationale for the selected project approach and an assessment of its feasibility. The process is identical to a feasibility study done for large roadway and transit projects.

The alternatives analysis that is performed during a feasibility analysis uses a basic trade study technique, shown in Figure 10, that will be repeated many times during the project life cycle. At this early concept exploration step, the alternatives are fundamental choices, such as to maintain the existing facility ("do nothing"), build a new road, or add ITS technology to the existing facility. During design, the alternatives are design decisions, such as whether signs should be located at location A, B, or C. During construction, alternatives may have to do with optimizing closures while the work is performed. At each step, a set of alternatives is identified and analyzed from technical, economic, and operational perspectives.

Figure 10: Concept Exploration Uses Basic Trade Study Techniques

A

feasibility study should be conducted only when a broad analysis is needed

before the commitment of development resources. Some states require a

feasibility study for certain ITS projects. A feasibility study is typically

not required for smaller, incremental ITS projects where there are not

fundamentally different approaches for implementation and where feasibility is

not in question – for example, a project that adds DMS to an existing system.

In other cases, a broad exploration of alternatives is not warranted but a

cost-benefit study is needed to make the business case for the project.

A

feasibility study should be conducted only when a broad analysis is needed

before the commitment of development resources. Some states require a

feasibility study for certain ITS projects. A feasibility study is typically

not required for smaller, incremental ITS projects where there are not

fundamentally different approaches for implementation and where feasibility is

not in question – for example, a project that adds DMS to an existing system.

In other cases, a broad exploration of alternatives is not warranted but a

cost-benefit study is needed to make the business case for the project.

Here at the very beginning of project development, the unknowns will certainly outnumber the knowns. Without a Concept of Operations or requirements, many assumptions will have to be made. It is important to educate the group performing this assessment on the concept exploration process and to set a schedule – otherwise, this stage could be an open-ended process since there's always something new over the horizon. The process activities are:

Based on the statement of the problem, establish cost constraints and any other constraints that will be used to limit the acceptable alternatives. Determine how success will be measured – the degree to which the project will solve the stated problem or realize the identified opportunity. These measures should be included in the criteria that will be used to evaluate the alternative concepts. Also, do a preliminary risk analysis to identify issues and obstacles that may affect the project, and develop evaluation criteria that will measure the sensitivity of each candidate solution to each of the risks.

It is

a good idea to define evaluation criteria before alternative concepts are

enumerated. By developing the criteria first, you reduce the risk of

intuitively settling on an alternative and then subconsciously biasing the

criteria toward the preferred alternative. It is important to develop the

criteria so that they are not preferential to one of the concepts.

It is

a good idea to define evaluation criteria before alternative concepts are

enumerated. By developing the criteria first, you reduce the risk of

intuitively settling on an alternative and then subconsciously biasing the

criteria toward the preferred alternative. It is important to develop the

criteria so that they are not preferential to one of the concepts.

If you find that you are identifying specific products or vendors as the alternative solutions, you are being too specific. A trade comparison of products or vendors occurs much later in the process based on defined requirements during design. The alternatives here should be high-level concepts – for example, instrumentation with traffic detectors versus use of traffic probes to support traffic data collection for a corridor. Alternatives may also reflect life-cycle options, such as leased versus owned equipment, contracted versus in-house staffing, etc. You may have to establish a basic architecture and a minimal strawman design to support the analysis, but do no more than is necessary to support the evaluation.

A

common pitfall in developing a concept exploration or any trade study

comparison is the premature selection of an alternative early in the study

process. Be sure to keep an open mind and spend enough time on all viable

options. If only one of the alternatives is defined in detail in a concept

exploration, it creates the appearance that the other alternatives were not

earnestly considered or explored.

A

common pitfall in developing a concept exploration or any trade study

comparison is the premature selection of an alternative early in the study

process. Be sure to keep an open mind and spend enough time on all viable

options. If only one of the alternatives is defined in detail in a concept

exploration, it creates the appearance that the other alternatives were not

earnestly considered or explored.

A number of tools support cost-benefit analysis for ITS projects:

The ITS Costs database

contains estimates that can be used for policy analysis and cost-benefit

analysis. It contains unit cost estimates for more than 200 ITS technologies

as well as system costs for selected ITS deployments. (The unit cost database

is available online and as an Excel spreadsheet at http://www.itscosts.its.dot.gov.)

The ITS Costs database

contains estimates that can be used for policy analysis and cost-benefit

analysis. It contains unit cost estimates for more than 200 ITS technologies

as well as system costs for selected ITS deployments. (The unit cost database

is available online and as an Excel spreadsheet at http://www.itscosts.its.dot.gov.) The ITS Benefits

database contains information regarding the impacts of ITS projects on the

operation of the surface transportation system. The ITS Benefits website

provides an online and Excel spreadsheet version of this database as well as

several other documents pertaining to ITS benefits. (See http://www.itsbenefits.its.dot.gov.)

The ITS Benefits

database contains information regarding the impacts of ITS projects on the

operation of the surface transportation system. The ITS Benefits website

provides an online and Excel spreadsheet version of this database as well as

several other documents pertaining to ITS benefits. (See http://www.itsbenefits.its.dot.gov.) The ITS Deployment

Analysis System (IDAS) is software developed by the Federal Highway

Administration that can be used to estimate the benefits and costs of ITS

investments, which are either alternatives to or enhancements of traditional

highway and transit infrastructure. IDAS can currently predict relative costs

and benefits for more than 60 types of ITS investments. (See http://idas.camsys.com/.)

The ITS Deployment

Analysis System (IDAS) is software developed by the Federal Highway

Administration that can be used to estimate the benefits and costs of ITS

investments, which are either alternatives to or enhancements of traditional

highway and transit infrastructure. IDAS can currently predict relative costs

and benefits for more than 60 types of ITS investments. (See http://idas.camsys.com/.) SCRITS (SCReening for

ITS) is a spreadsheet analysis tool for estimating the user benefits of ITS. It

is intended as a sketch- or screening-level analysis tool for allowing

practitioners to obtain an initial indication of the possible benefits of

various ITS applications. (For more information, see https://www.fhwa.dot.gov/steam/scrits.htm.)

SCRITS (SCReening for

ITS) is a spreadsheet analysis tool for estimating the user benefits of ITS. It

is intended as a sketch- or screening-level analysis tool for allowing

practitioners to obtain an initial indication of the possible benefits of

various ITS applications. (For more information, see https://www.fhwa.dot.gov/steam/scrits.htm.) While

it is best to do a complete analysis of every alternative, sometimes the sheer

number of alternatives makes this thorough approach impractical. One common

practice is to apply the evaluation criteria in stages, weeding out the

alternatives that don't meet the fundamental criteria so that the more

detailed, time-consuming analysis is performed on only a few of the most viable

alternatives. The evaluation should be validated by reviewing the analysis

with stakeholders who may have reasonable objections to certain assumptions and

alternatives.

While

it is best to do a complete analysis of every alternative, sometimes the sheer

number of alternatives makes this thorough approach impractical. One common

practice is to apply the evaluation criteria in stages, weeding out the

alternatives that don't meet the fundamental criteria so that the more

detailed, time-consuming analysis is performed on only a few of the most viable

alternatives. The evaluation should be validated by reviewing the analysis

with stakeholders who may have reasonable objections to certain assumptions and

alternatives.

Remember your audience when writing a feasibility study; this study

makes a business case primarily for a management audience. Any feature of the

study that prevents the reader from assimilating the costs and benefits and the

associated risks of each alternative solution as briefly, completely, and

painlessly as possible reduces the effectiveness of the study for the audience.

Remember your audience when writing a feasibility study; this study

makes a business case primarily for a management audience. Any feature of the

study that prevents the reader from assimilating the costs and benefits and the

associated risks of each alternative solution as briefly, completely, and

painlessly as possible reduces the effectiveness of the study for the audience.

Several review cycles may be required for the feasibility study. First, the document should be circulated among the project team to make sure that there is buy-in. Then an updated draft should be distributed to internal management and other organizations for approval.

The feasibility study establishes the business case for investment in a project by defining the reasons for undertaking the project and analyzing its costs and benefits. Different organizations and different projects will have different requirements, but a feasibility study should contain, at a minimum, the following:

Identification of Alternatives – Transportation Planning Studies

Feasibility studies that examine alternative concepts are frequently done for large transportation projects as part of corridor studies, major investment studies, and environmental analysis reports. The ITS option(s) in these studies often compete with traditional capital improvement options; hybrid options, which include a mix of technology and traditional capital improvements, are also considered.

For example, a congested corridor in Collin County, Texas, was the subject of a feasibility study report (FSR)9 that was prepared by representatives from the North Central Texas Council of Governments and affected agencies. This FSR examined the following alternatives: (1) do nothing, (2) build a new freeway, (3) build a toll road with electronic collection (two alternatives), and (4) build managed lanes. One summary table that compared the traffic volumes supported by the different alternatives is shown in Table 3. Supported traffic volumes, estimated capital costs, and potential revenue generation were used to compare the alternatives. The analysis favored the electronic toll alternatives.

Broad alternatives analyses like these are included in many planning studies.

Table 3: Comparison of Alternatives – Supported Traffic Volumes for 2025

Minnesota DOT developed a guidance document10 for cost-benefit analysis in 2005 that includes several illustrative examples. Generally, higher-level graphics that visually compare the costs and benefits of the alternatives, like the one shown in Figure 11, are used in the body of the cost-benefit analysis. More detailed computation that supports high-level graphics, like the table reproduced in Table 4, is included in appendices.

Figure 11: Example of High-Level Economic Comparison of Alternatives

Table 4: Example of Alternatives Benefit Estimation

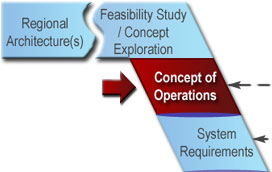

In

this step: The project stakeholders reach a shared

understanding of the system to be developed and how it will be operated and

maintained. The Concept of Operations (ConOps) is documented to provide a

foundation for more detailed analyses that will follow. It will be the basis

for the system requirements that are developed in the next step.

In

this step: The project stakeholders reach a shared

understanding of the system to be developed and how it will be operated and

maintained. The Concept of Operations (ConOps) is documented to provide a

foundation for more detailed analyses that will follow. It will be the basis

for the system requirements that are developed in the next step.

| OBJECTIVES |

|

|---|---|

| INPUT

Sources of Information |

|

| PROCESS

Key Activities |

|

| OUTPUT

Process Results |

|

| Review

Proceed only if you have: |

|

The Concept of Operations (ConOps) is a foundation document that frames the overall system and sets the technical course for the project. Its purpose is to clearly convey a high-level view of the system to be developed that each stakeholder can understand. A good ConOps answers who, what, where, when, why, and how questions about the project from the viewpoint of each stakeholder, as shown in Figure 12.

Figure 12: Concept of Operations (Adapted from ANSI/AIAA-G-043-1992)

In

ITS, we draw a distinction between an Operational Concept, which is the

high-level description of roles and responsibilities that is included in the

regional ITS architecture, and a Concept of Operations, which is the

more detailed, multifaceted document described in this section.

In

ITS, we draw a distinction between an Operational Concept, which is the

high-level description of roles and responsibilities that is included in the

regional ITS architecture, and a Concept of Operations, which is the

more detailed, multifaceted document described in this section.

Don't

assume that a new ConOps is required for every ITS project. A single

system-level ConOps can support many ITS projects that incrementally implement

and extend a system. For example, a ConOps may be developed for a large

transportation management system. This system may be implemented and expanded

with numerous ITS projects over several years. Once the ConOps is developed,

it may be reviewed and used with relatively minor updates for each of the

projects that incrementally implement the transportation management system.

Don't

assume that a new ConOps is required for every ITS project. A single

system-level ConOps can support many ITS projects that incrementally implement

and extend a system. For example, a ConOps may be developed for a large

transportation management system. This system may be implemented and expanded

with numerous ITS projects over several years. Once the ConOps is developed,

it may be reviewed and used with relatively minor updates for each of the

projects that incrementally implement the transportation management system.

Although there is no single recipe for developing a ConOps, successful efforts will include a few key activities:

If

you hire a consultant, don't assume that is the end of your responsibility for

ConOps development. The stakeholders are the foremost experts on their needs

and must be materially involved in the ConOps development. The consultant can

provide technical expertise on what should be in a ConOps, facilitate the

meetings and outreach activities, prepare the document, and coordinate the

review, but the stakeholders' concept should be documented in the end. The

stakeholders should consider the ConOps their document, not the

consultant's document.

If

you hire a consultant, don't assume that is the end of your responsibility for

ConOps development. The stakeholders are the foremost experts on their needs

and must be materially involved in the ConOps development. The consultant can

provide technical expertise on what should be in a ConOps, facilitate the

meetings and outreach activities, prepare the document, and coordinate the

review, but the stakeholders' concept should be documented in the end. The

stakeholders should consider the ConOps their document, not the

consultant's document.

The

best person to write the ConOps may not be the foremost technical expert on the

proposed system. Stakeholder outreach, consensus building, and the ability to

understand and clearly document the larger picture are key.

The

best person to write the ConOps may not be the foremost technical expert on the

proposed system. Stakeholder outreach, consensus building, and the ability to

understand and clearly document the larger picture are key.

Portions of the ConOps can often be created from existing documents. For example, the regional ITS architecture identifies stakeholder roles and responsibilities that can be used. A feasibility study, project study report, or other preliminary study documentation may provide even more relevant information. A project application form used to support project programming will normally include goals, objectives, and other information that should be reflected in the ConOps for continuity.

Operational

scenarios are an excellent way to work with the stakeholders to define a

ConOps. Scenarios associated with a major incident, a work zone, or another

project-specific situation provide a vivid context for a discussion of the

system's operation. It is common practice to define several scenarios that

cover normal system operation (the "sunny day" scenario) as well as various

fault-and-failure scenarios.

Operational

scenarios are an excellent way to work with the stakeholders to define a

ConOps. Scenarios associated with a major incident, a work zone, or another

project-specific situation provide a vivid context for a discussion of the

system's operation. It is common practice to define several scenarios that

cover normal system operation (the "sunny day" scenario) as well as various

fault-and-failure scenarios.

A System Validation Plan is prepared that defines the consensus validation approach and performance measures. As with the ConOps, all affected stakeholder organizations should formally approve the System Validation Plan at this early stage so that downstream, all will agree on when they can "declare victory" that the new system is the right system. The plan will be finalized during system validation (see Section 4.9.2).

The ConOps should be an approachable document that is relevant to all project stakeholders, including system operators, maintainers, developers, owners/decision makers, and other transportation professionals. The art of creating a good ConOps lies in using natural language and supporting graphics so that it is accessible to all while being technically precise enough to provide a traceable foundation for the requirements document and the System Validation Plan.

The

ConOps is not a requirements document that lists the detailed, testable

requirements for the system, nor is it a design document that specifies the

technical design or technologies to be used. Resist the temptation to

predetermine the solution in the ConOps – you should not unnecessarily preclude

viable options at this early step. You also want to "keep it simple" and

refrain from using formalized, highly structured English that is more suitable

for the requirements and design specifications that follow.

The

ConOps is not a requirements document that lists the detailed, testable

requirements for the system, nor is it a design document that specifies the

technical design or technologies to be used. Resist the temptation to

predetermine the solution in the ConOps – you should not unnecessarily preclude

viable options at this early step. You also want to "keep it simple" and

refrain from using formalized, highly structured English that is more suitable

for the requirements and design specifications that follow.

Done right, the ConOps will be a living document that can be revised and amended so that it continues to reflect how the system is really operated. Later in the life cycle, an up-to-date ConOps can be used to define changes and upgrades to the system.

Two different industry standards provide suggested outlines for Concepts of Operations: ANSI/AIAA-G-043-1992 and IEEE Std 1362-1998, as shown in Figure 13. Both outlines include similar content, although the structure of the IEEE outline lends itself more to incremental projects that are upgrading an existing system or capability. The ANSI/AIAA outline is focused on the system to be developed, so it may lend itself more to new system developments where there is no predecessor system. Successful ConOps have been developed using both outlines. Obtain a copy of both, and make your own choice if you need to develop a ConOps.

Figure 13: Industry-Standard Outlines for Concept of Operations

Graphics should be used to highlight key points in the ConOps. At a minimum, a system diagram that identifies the key elements and interfaces and clearly defines the scope of the project should be included. Tables and graphics can also be a very effective way to show key goals and objectives, operational scenarios, etc.

The

Rule/Policy requires identification of participating agency roles and

responsibilities as part of the systems engineering analysis for ITS projects.

It also requires that the procedures and resources necessary for operations and

management of the system be defined. These elements are initially defined and

documented for the project as part of the ConOps. In the ANSI/AIAA standard

outline, most of these elements fit under Chapter 3 (User-Oriented Operational

Description). In the IEEE outline, the current system information is included

in Chapter 3 and the proposed system information is in Chapter 5.

The

Rule/Policy requires identification of participating agency roles and

responsibilities as part of the systems engineering analysis for ITS projects.

It also requires that the procedures and resources necessary for operations and

management of the system be defined. These elements are initially defined and

documented for the project as part of the ConOps. In the ANSI/AIAA standard

outline, most of these elements fit under Chapter 3 (User-Oriented Operational

Description). In the IEEE outline, the current system information is included

in Chapter 3 and the proposed system information is in Chapter 5.

The System Validation Plan that is created during this step should describe how the final system will be measured to determine whether or not it meets the original intent of the stakeholders as described in the ConOps. (For further details and examples, see Section 4.9.)

Many Concepts of Operations have been generated for all types of ITS projects in the last five years. Excerpts from a few examples are included here to show some of the ways that key elements of the ConOps have been documented for ITS projects following the sequence from the ANSI/AAIA outline.

User-Oriented Operational Description (Roles and Responsibilities)

Typically, roles and responsibilities are documented as a list or in tabular form. Table 5 is an excerpt of a table from the California Advanced Transportation Management System (CATMS) ConOps that is structured to show shared responsibilities and to highlight coordination points between the different system stakeholders. This early documentation of "who does what" grabs the stakeholders' attention and supports development of system requirements and operational agreements and procedures in future steps.

Table 5: Roles and Responsibilities (Excerpt from CATMS Concept of Operations)

System Overview

The system overview is typically supported by one or more diagrams that show the scope, major elements, and interrelationships of the system. Many types of diagrams can be used, from simple block diagrams to executive-level graphics-rich diagrams. Figure 14 is an example of a high-level graphic that includes basic process flow information, roles and responsibilities, and interfaces, providing an "at a glance" overview of the major facets of the system.

Figure 14: Example of System Overview Graphic

(from Communicating with the Public Using ATIS During Disasters Concept of Operations)

Operational Scenarios

In operational scenarios, the ConOps takes the perspective of each of the stakeholders as different scenarios unfold that illustrate major system capabilities and stakeholder interactions under normal and stressed (e.g., failure mode) circumstances. The stakeholders walk through the scenario and document what the agencies and system would do at each step.

Figure 15 shows an example of a scenario that includes some realistic detail that help stakeholders immerse themselves in the scenario and visualize system operation. This is one of five scenarios that were developed for the City of Lincoln StarTRAN AVL system to show the major system capabilities and the interactions between the AVL system and its users and other interfacing systems.

Figure 15: Operational Scenario Description11

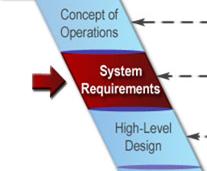

In

this step: The stakeholder needs identified in the Concept

of Operations are reviewed, analyzed, and transformed into verifiable

requirements that define what the system will do but not how the

system will do it. Working closely with stakeholders, the requirements are

elicited, analyzed, validated, documented, and baselined.

In

this step: The stakeholder needs identified in the Concept

of Operations are reviewed, analyzed, and transformed into verifiable

requirements that define what the system will do but not how the

system will do it. Working closely with stakeholders, the requirements are

elicited, analyzed, validated, documented, and baselined.

| OBJECTIVES |

|

|---|---|

| INPUT

Sources of Information |

|

| PROCESS

Key Activities |

|

| OUTPUT

Process Results |

|

| Review

Proceed only if you have: |

|

One of the most important attributes of a successful project is a clear statement of requirements that meet the stakeholders' needs. Unfortunately, creating a clear statement of requirements is often much easier said than done. The initial list of stakeholder needs that are collected will normally be a jumble of requirements, wish lists, technology preferences, and other disconnected thoughts and ideas. A lot of analysis must be performed to develop a good set of requirements from this initial list.

Figure 16: Requirements Engineering Activities

EIA-63212

defines requirement as "something that governs what, how well, and under

what conditions a product will achieve a given purpose." This is a good

definition because it touches on the different types of requirements that must

be defined for a project. Functional requirements define "what" the system

must do, performance requirements define "how well" the system must perform its

functions, and a variety of other requirements define "under what conditions"

the system must operate. Requirements engineering covers all of the

activities needed to define and manage requirements that are shown in Figure 16.

EIA-63212

defines requirement as "something that governs what, how well, and under

what conditions a product will achieve a given purpose." This is a good

definition because it touches on the different types of requirements that must

be defined for a project. Functional requirements define "what" the system

must do, performance requirements define "how well" the system must perform its

functions, and a variety of other requirements define "under what conditions"

the system must operate. Requirements engineering covers all of the

activities needed to define and manage requirements that are shown in Figure 16.

Specify

What, Not How. Be sure to keep the definition of a requirement in mind as

you develop your system requirements. Many requirements documents contain

statements that are not requirements. One of the most common pitfalls is to

jump to a design solution and then write "requirements" that define how the

system will accomplish its functions. Specify what the system will do in the

system requirements, and save how the system will do it for the system design

step.

Specify

What, Not How. Be sure to keep the definition of a requirement in mind as

you develop your system requirements. Many requirements documents contain

statements that are not requirements. One of the most common pitfalls is to

jump to a design solution and then write "requirements" that define how the

system will accomplish its functions. Specify what the system will do in the

system requirements, and save how the system will do it for the system design

step.

It is important to involve stakeholders in requirements development. Stakeholders may not have experience in writing requirements statements, but they are the foremost experts concerning their own requirements. The project requirements ultimately are the primary formal communication from the system stakeholders to the system developer. The project will be successful only if the requirements adequately represent stakeholders' needs and are written so they will be interpreted correctly by the developer.

In the

effort to get stakeholders involved, make sure you don't sour them on the

project by making unreasonable demands on their time or putting them in

situations where they can't contribute. Many nontechnical users have been

subjected to stacks of detailed technical outputs that they can't productively

review. Sooner or later, the user will wave the white flag in this situation

and become unresponsive. You must (1) pick your stakeholders carefully and (2)

make participation as focused and productive as possible.

In the

effort to get stakeholders involved, make sure you don't sour them on the

project by making unreasonable demands on their time or putting them in

situations where they can't contribute. Many nontechnical users have been

subjected to stacks of detailed technical outputs that they can't productively

review. Sooner or later, the user will wave the white flag in this situation

and become unresponsive. You must (1) pick your stakeholders carefully and (2)

make participation as focused and productive as possible.

The

Requirements step is an important one that you shouldn't skimp on. Every ITS

project should have a documented set of requirements that are approved and

baselined. Of course, this doesn't mean that a new requirements specification

must be written from scratch for every project. Projects that enhance or

extend an existing system should start with the existing system requirements.

This doesn't have to be a particularly large document for smaller ITS

projects. The system requirements specification for a recent website

development project was less than 20 pages.

The

Requirements step is an important one that you shouldn't skimp on. Every ITS

project should have a documented set of requirements that are approved and

baselined. Of course, this doesn't mean that a new requirements specification

must be written from scratch for every project. Projects that enhance or

extend an existing system should start with the existing system requirements.

This doesn't have to be a particularly large document for smaller ITS

projects. The system requirements specification for a recent website

development project was less than 20 pages.

There isn't one "right" approach for requirements development. Different organizations develop requirements in different ways. Even in the same organization, the requirements development process for a small ITS project can be much less formal than the process for the largest, most complex ITS projects. The differences are primarily in the details and in the level of formality. All requirements development processes should involve elicitation, analysis, documentation, validation, and management activities. Note that each of these activities is highly iterative. In the course of a day, a systems engineer may do a bit of each of the activities as a new requirement is identified, refined, and documented.

Elicit

and elicitation are words you may not run into every day. Elicit means

to draw forth or to evoke a response. This is the perfect word to use in this

case because you will have to do some work to draw out the requirements from

the stakeholders and any existing documentation. More work is implied by

"elicit requirements" than if we said "collect requirements" or even "identify

requirements", and this is intended.

Elicit

and elicitation are words you may not run into every day. Elicit means

to draw forth or to evoke a response. This is the perfect word to use in this

case because you will have to do some work to draw out the requirements from

the stakeholders and any existing documentation. More work is implied by

"elicit requirements" than if we said "collect requirements" or even "identify

requirements", and this is intended.

Make

sure that you have the right stakeholders involved. This means not only the

right organizations but also the right individuals within them. For example,

it isn't enough to engage someone from the maintenance organization – it should

be an electrical maintenance person who has experience with ITS equipment

maintenance for ITS projects. Furthermore, as we move through the steps in the

process and the products become more technical, different stakeholders may be

involved. Managers may be more involved in the Concept of Operations, while

technical staff will be more involved in review of the system requirements and

high-level design. Finding individuals with the right combination of knowledge

of current operations, vision of the future system, and time to invest in

supporting requirements development is one of the key early challenges in any

requirements development effort.

Make

sure that you have the right stakeholders involved. This means not only the

right organizations but also the right individuals within them. For example,

it isn't enough to engage someone from the maintenance organization – it should

be an electrical maintenance person who has experience with ITS equipment

maintenance for ITS projects. Furthermore, as we move through the steps in the

process and the products become more technical, different stakeholders may be

involved. Managers may be more involved in the Concept of Operations, while

technical staff will be more involved in review of the system requirements and

high-level design. Finding individuals with the right combination of knowledge

of current operations, vision of the future system, and time to invest in

supporting requirements development is one of the key early challenges in any

requirements development effort.

There

are many techniques for working with stakeholders to get to the fundamental

requirements of the system. The Florida SEMP13

highlights one of the best and simplest techniques – the "Five Whys" – that was

popularized by Toyota in the 1970s. Using the Five Whys technique, you look at

an initially stated need and ask "Why?" repeatedly, not unlike a curious

four-year-old, until you find the real underlying requirements. The dialog in Table

6 is an example that is based on an actual conversation.

There

are many techniques for working with stakeholders to get to the fundamental

requirements of the system. The Florida SEMP13

highlights one of the best and simplest techniques – the "Five Whys" – that was

popularized by Toyota in the 1970s. Using the Five Whys technique, you look at

an initially stated need and ask "Why?" repeatedly, not unlike a curious

four-year-old, until you find the real underlying requirements. The dialog in Table

6 is an example that is based on an actual conversation.

Table 6: The "Five Whys" Technique in Action

| Stakeholder | Systems Engineer |

|---|---|

| I need irrigation channels on my keyboard. | Why? |

| I occasionally spill coffee on the keyboard. | Why? |

| I need to have three or four manuals open to operate the system and the coffee just gets knocked over. | Why do you need to have three or four manuals open? |

| ... | ... |

| The dialog continues as the systems engineer discovers several different underlying needs that will drive environmental requirements, human factors/workspace requirements, and user interface requirements, all by pursuing the initial stated need for "irrigation channels". | |

Of course, you sometimes need to direct the conversation by asking more than "why" to use this technique effectively. In the example, the conversation could easily have veered off to a discussion of the user's love for Starbucks coffee. Five iterations is a good rule of thumb, but it may take fewer or more iterations – the idea is to be persistent until you get to the core issues. Note also that the dialog can be internal – the stakeholder could have sat down and asked herself "Why", using the same technique to get at her underlying needs.

As

you gather the requirements, be sure to look beyond the operational

requirements for the system and cover the complete life cycle (system

development, deployment, training, transition, operations and maintenance,

upgrades, and retirement) as well as requirements such as security and safety.

More than one ITS project has failed because the security requirements of

public safety stakeholders were not captured and reflected in the ITS project

requirements. A good system requirements template can be used as a checklist

to help ensure that all types of requirements are considered.

As

you gather the requirements, be sure to look beyond the operational

requirements for the system and cover the complete life cycle (system

development, deployment, training, transition, operations and maintenance,

upgrades, and retirement) as well as requirements such as security and safety.

More than one ITS project has failed because the security requirements of

public safety stakeholders were not captured and reflected in the ITS project

requirements. A good system requirements template can be used as a checklist

to help ensure that all types of requirements are considered.

The

best way to start writing requirements is to use just two words: a verb and a

noun. For example, the user requirement "monitor road weather conditions"

would yield system requirements such as "shall detect ice", "shall monitor wind

speed", and "shall monitor pavement temperature". Performance requirements

would define the different kinds of ice conditions and the range of wind speeds

and pavement temperatures.

The

best way to start writing requirements is to use just two words: a verb and a

noun. For example, the user requirement "monitor road weather conditions"

would yield system requirements such as "shall detect ice", "shall monitor wind

speed", and "shall monitor pavement temperature". Performance requirements

would define the different kinds of ice conditions and the range of wind speeds

and pavement temperatures.

Requirements

are normally defined in a requirements hierarchy in which the highest-level

"parent" requirements are supported by more detailed "child" requirements. A

hierarchy allows you to start with high-level requirements and work your way

down to the details. The highest-level requirements should trace to

stakeholder needs in the Concept of Operations. A hierarchy is a useful

organizational structure that makes it easier to write and review requirements

and to manage the requirements development activity. An example of a

requirements hierarchy is given in Figure 17.

Requirements

are normally defined in a requirements hierarchy in which the highest-level

"parent" requirements are supported by more detailed "child" requirements. A

hierarchy allows you to start with high-level requirements and work your way

down to the details. The highest-level requirements should trace to

stakeholder needs in the Concept of Operations. A hierarchy is a useful

organizational structure that makes it easier to write and review requirements

and to manage the requirements development activity. An example of a

requirements hierarchy is given in Figure 17.

Figure 17: Example of Hierarchy of High-Level and Detailed Requirements

For larger systems, it can be

very difficult to "get your arms around" all of the requirements. Requirements

modeling tools provide a graphic way to define requirements so that they are

easier to understand and analyze. These tools are particularly useful for more

complex ITS projects.  There are

numerous requirements modeling tools and techniques available that can help you

model the system as part of the analysis process. INCOSE maintains a data

repository of available modeling tools that is available on its website14.

There are

numerous requirements modeling tools and techniques available that can help you

model the system as part of the analysis process. INCOSE maintains a data

repository of available modeling tools that is available on its website14.

A

model is a representation of something else. There are physical models,

like the scale model of a train, and more abstract models, like an

architectural plan for a new building. Many different models of the system to

be built can be created and used as part of the systems engineering process.

During requirements analysis, logical models are used that describe what the

system will do. Later, during system design, physical models are created that

show how the system will be implemented.

A

model is a representation of something else. There are physical models,

like the scale model of a train, and more abstract models, like an

architectural plan for a new building. Many different models of the system to

be built can be created and used as part of the systems engineering process.

During requirements analysis, logical models are used that describe what the

system will do. Later, during system design, physical models are created that

show how the system will be implemented.

Requirements modeling is an iterative process. Draft models can be developed early in the process based on the Concept of Operations and the regional ITS architecture. These models are refined as they are used to support requirements elicitation and walkthroughs, keeping bounds on the system and reducing requirements creep.

The

requirements documentation should include more than requirements. There are

many different attributes that should be tracked for each requirement.

A rich set of attributes is particularly important for large, complex

projects. If you are developing such a project, consider specifying the following

for each requirement: requirement number, source, author, creation date,

change history, verification method, priority, and status. The historical and

change-tracking attributes are particularly important since they allow

management to measure and track requirements stability.

The

requirements documentation should include more than requirements. There are

many different attributes that should be tracked for each requirement.

A rich set of attributes is particularly important for large, complex

projects. If you are developing such a project, consider specifying the following

for each requirement: requirement number, source, author, creation date,

change history, verification method, priority, and status. The historical and

change-tracking attributes are particularly important since they allow

management to measure and track requirements stability.

Traceability is another important aspect of requirements documentation. Each requirement should trace to a higher-level requirement, a stakeholder need, or other governing rules, standards, or constraints from which the requirement is derived. As the system is developed, each requirement will also be traced to the test case that will verify it, to more detailed "child" requirements that may be derived from it, and to design elements that will help to implement it. Establish and populate the Traceability Matrix at this stage, and continue to populate it during development. The Traceability Matrix is a vital document that is maintained to the end of project development, allowing traceability from user needs to the system components, verification, and validation.

You

will see "validation" used in a few different contexts in systems engineering.

Here in requirements validation, you make sure that the requirements are

correct and complete. Later, in system validation (discussed in Section

4.9), you make sure that you have built the right system. In fact, the

requirements validation that is performed here will ultimately help to make

sure that the system validation is successful in the end.

You

will see "validation" used in a few different contexts in systems engineering.

Here in requirements validation, you make sure that the requirements are

correct and complete. Later, in system validation (discussed in Section

4.9), you make sure that you have built the right system. In fact, the

requirements validation that is performed here will ultimately help to make

sure that the system validation is successful in the end.

A

walkthrough is a technique in which a review team steps through a deliverable

(e.g., requirements, design, or code) looking for problems. A walkthrough

should be relatively informal and "blame free" to maximize the number of

problems that are identified. A requirements walkthrough should be attended by

the people that have a vested interest in the requirements. For a large

project, this might include the requirements author, customer, user

representative(s), implementers, and testers.

A

walkthrough is a technique in which a review team steps through a deliverable

(e.g., requirements, design, or code) looking for problems. A walkthrough

should be relatively informal and "blame free" to maximize the number of

problems that are identified. A requirements walkthrough should be attended by

the people that have a vested interest in the requirements. For a large

project, this might include the requirements author, customer, user

representative(s), implementers, and testers.

Table 7 identifies an oft-repeated list of attributes of good requirements. As part of the validation process, you do your best to make sure that the requirements have all of these desired attributes. Unfortunately, computers can do only a fraction of this validation and people have to do the rest. Techniques for validating a requirement against each of these quality attributes are also shown in Table 7. An attribute list like this can be converted into a checklist that prompts reviewers to ask themselves the right questions as they are reviewing the requirements.

Table 7: Validating Quality Attributes of Good Requirements

Table 7: Validating Quality Attributes of Good Requirements

| Quality Attribute | Validate by: |

|---|---|

| Necessary | Make sure that each requirement traces to either a stakeholder need in the ConOps or a parent requirement. A computer can check that the traceability is complete, but people have to verify that the identified traces are valid. |

| Clear | Some requirements management tools can help with this by looking for red-flag words and constructs in the requirements (e.g., "user friendly", "optimum", "real-time", pronouns, and complex sentences). Most of this aspect of validation relies on walkthroughs and other reviews to make sure the requirements aren't subject to different interpretations. The main culprit here is ambiguity in the English language. |

| Complete | Does every stakeholder or organizational need in the ConOps trace to at least one requirement? If you implement all of the requirements that trace to the need, will the need be fully met? A computer can answer the first question, but only stakeholder(s) can answer the second. |

| Correct | In general, it takes a walkthrough to verify that the requirements accurately describe the functionality and performance that must be delivered. The stakeholders must validate that the highest-level system requirements are correct. Traceability can assist in determining the correctness of lower-level requirements. If a child requirement is in conflict with a parent requirement, then either the parent or the child requirement is incorrect. |

| Feasible | Again, this must be determined by review and analysis of the requirements. A computer can help with the analysis and possibly even flag words like "instant" or "instantaneous" that may be found in infeasible requirements, but a person ultimately makes the judgment of whether the requirements are feasible. In this case, it is the developer who can provide a reality check and identify requirements that may be technically infeasible or key cost drivers early in the process. Since system performance is dependent on system design and technology choices, requirements feasibility will continue to be monitored and addressed as the system design is developed. |

| Verifiable | Does the requirement have a verification method assigned? (This is something a computer can check.) Is the requirement really stated in a way that is verifiable? (This much more difficult check can only be performed by people.) For example, ambiguous requirements are not verifiable. |

Every

ITS project should have a tool that helps to manage the requirements baseline.

More complex ITS

projects will benefit from a tool specifically for requirements management such

as DOORS or Requisite-Pro.15

A professional requirements management tool is expensive, but it includes a

long list of capabilities including change management, requirements attributes

storage and reporting, impact analysis, requirements status tracking,

requirements validation tools, access control, and more.

Every

ITS project should have a tool that helps to manage the requirements baseline.

More complex ITS

projects will benefit from a tool specifically for requirements management such

as DOORS or Requisite-Pro.15

A professional requirements management tool is expensive, but it includes a

long list of capabilities including change management, requirements attributes

storage and reporting, impact analysis, requirements status tracking,

requirements validation tools, access control, and more.

Like the other requirements

engineering activities, the requirements management capabilities should be

scaled based on the complexity and size of the ITS project. Requirements for

smaller ITS projects can be managed easily and effectively by a single engineer

using a general purpose tool like Microsoft Access or Excel.

Like the other requirements

engineering activities, the requirements management capabilities should be

scaled based on the complexity and size of the ITS project. Requirements for

smaller ITS projects can be managed easily and effectively by a single engineer

using a general purpose tool like Microsoft Access or Excel.

No matter how you developed your requirements, you must document them in some consistent, accessible, and reviewable way. The requirements development process may result in several different levels of requirements over several steps in the "V" – stakeholder requirements, system requirements, subsystem requirements, etc. – that may be documented in several different outputs. For example, stakeholder requirements might be documented in a series of Use Cases; system requirements, in a System Requirements Specification; and subsystem requirements, in subsystem specifications. All of these requirements should be compiled in a single repository that can be used to manage and publish the requirements specifications at each stage of the project.

It is much

easier to use a standard template for the requirements specifications than it

is to come up with your own, and numerous standard templates are available. If

your organization does not have a standard requirements template, you can start

with a standard template like the one contained in IEEE Standard 830 (for

software requirements specifications) or IEEE Standard 1233 (for system

requirements specifications). Starting with a template saves time and ensures

that the requirements specification is complete. Of course, the template can

be modified as necessary to meet the needs of the project.

It is much

easier to use a standard template for the requirements specifications than it

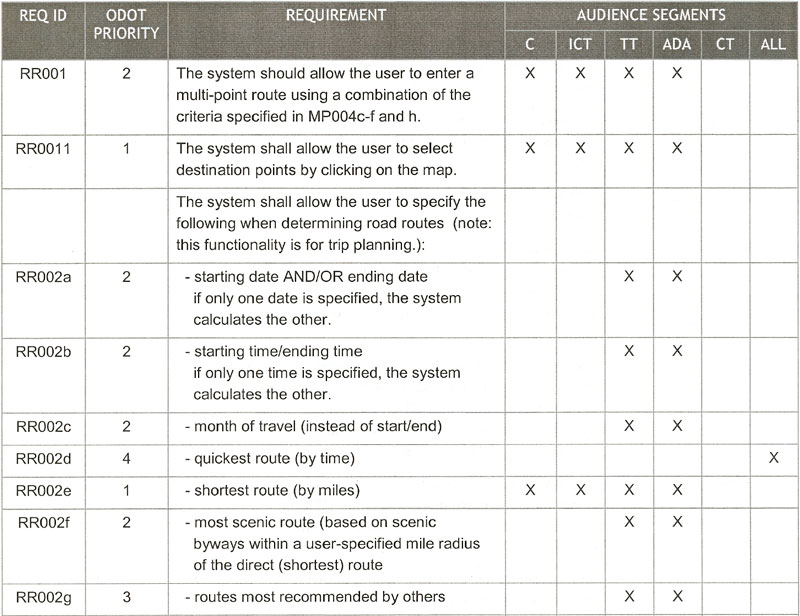

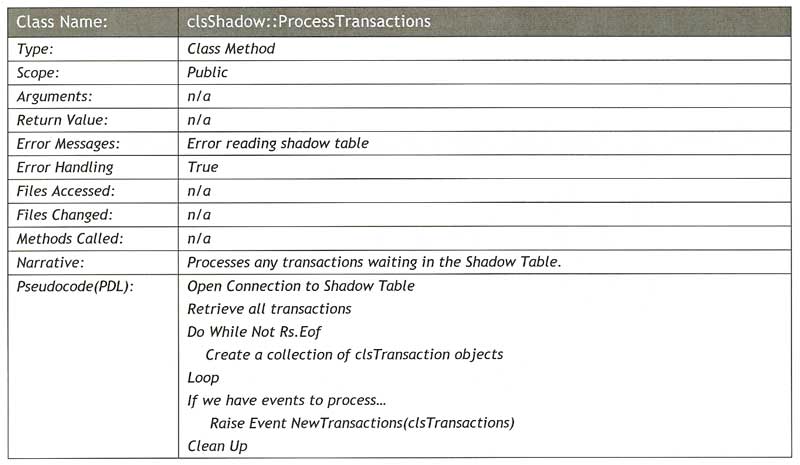

is to come up with your own, and numerous standard templates are available. If